Artificial human models successfully replicate cardiac rhythms.

Breaking News: Deepfakes just got a major upgrade, thanks to a team of scientists from Humboldt University in Berlin! The researchers have created digital human twins that mimic realistic heartbeats and subtle changes in facial color, which makes deepfakes more convincing than ever. The results of their study are published in the journal Frontiers in Imaging.

Deepfakes, a blend of 'deep learning' and 'fake', are digital images or videos in which a person's voice or appearance has been manipulated using artificial intelligence. They can replicate someone's appearance, expressions, and speech, which makes them extremely realistic.

In their experiment, the researchers developed a detector that automatically estimates a person's pulse rate from video. They tested the algorithm on 10-second deepfake videos of faces. The results were striking: compared with electrocardiography (ECG) measurements, the pulse readings differed from the real data by only two to three beats per minute.
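
The general idea behind reading a pulse from video (often called remote photoplethysmography) is that each heartbeat slightly changes skin color, so the average color of a face region oscillates at the heart rate. The sketch below is not the Humboldt team's detector; it is a generic frequency-domain estimate, assuming a per-frame color trace (for example, the mean green-channel value of a face region) has already been extracted from the video. The function names `estimate_pulse_bpm` and `mean_abs_error_bpm`, the 0.7 to 4 Hz heart-rate band, and the zero-padding factor are all illustrative assumptions. The second function shows how a beats-per-minute error against ECG reference values, like the two-to-three bpm figure above, could be computed.

```python
import numpy as np


def estimate_pulse_bpm(trace, fps, low_hz=0.7, high_hz=4.0):
    """Estimate pulse rate in beats per minute from a per-frame color trace.

    Hypothetical helper. `trace` is a 1-D sequence (e.g. mean green value of a
    face region per frame), `fps` is the frame rate. The band limits are an
    assumed plausible heart-rate range (42 to 240 bpm).
    """
    x = np.asarray(trace, dtype=float)
    x = x - x.mean()                  # remove the average brightness (DC component)
    x = x * np.hanning(len(x))        # taper the ends to reduce spectral leakage
    n_fft = 8 * len(x)                # zero-pad for a finer frequency grid
    spectrum = np.abs(np.fft.rfft(x, n=n_fft))
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    peak_hz = freqs[band][np.argmax(spectrum[band])]  # strongest in-band frequency
    return 60.0 * peak_hz             # Hz -> beats per minute


def mean_abs_error_bpm(estimates, references):
    """Mean absolute difference (bpm) between video estimates and ECG references."""
    est = np.asarray(estimates, dtype=float)
    ref = np.asarray(references, dtype=float)
    return float(np.mean(np.abs(est - ref)))


if __name__ == "__main__":
    # Synthetic 10-second clip at 30 fps: a faint 72 bpm (1.2 Hz) color
    # oscillation on top of a constant brightness, plus random noise.
    rng = np.random.default_rng(0)
    fps, seconds, true_bpm = 30.0, 10, 72.0
    t = np.arange(int(fps * seconds)) / fps
    trace = (120.0
             + 0.5 * np.sin(2 * np.pi * (true_bpm / 60.0) * t)
             + 0.1 * rng.standard_normal(t.size))
    est = estimate_pulse_bpm(trace, fps)
    print(f"estimated {est:.1f} bpm, error {abs(est - true_bpm):.1f} bpm")
```

A 10-second clip limits the raw frequency resolution to 0.1 Hz (6 bpm); zero-padding interpolates the spectrum on a finer grid but does not add information, which is one reason real systems add face tracking, skin segmentation, and filtering on top of a simple peak search like this.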

With such rapid advances in deepfake technology, its potential to transform various industries is clear. Applications include entertainment, apps for altering one's appearance, and healthcare, where webcams could enable remote patient monitoring. However, the growing realism of deepfakes also raises concerns about manipulation, misinformation, and online fraud.

In the entertainment industry, high-quality deepfakes could undermine the authentication systems used in media production and in managing celebrity likenesses, which is driving demand for more robust detection tools. In finance and on social media, better deepfake detection is crucial to prevent identity-verification breaches and impersonation. In healthcare, misuse scenarios include fraudulent medical imagery and AI-generated misinformation.

To counter the risks associated with deepfakes, recent U.S. legislative efforts include the Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks Act (TAKE IT DOWN Act) and the intimate deepfakes bill, which target non-consensual explicit content. These measures complement broader legislation, such as the EU’s AI Act, which emphasizes accountability for high-risk AI applications. The rise of deepfake technology is clearly triggering a wave of regulatory, technological, and educational responses to misinformation, manipulation, and fraud. So, buckle up, folks! The world of AI is about to get even more fascinating.

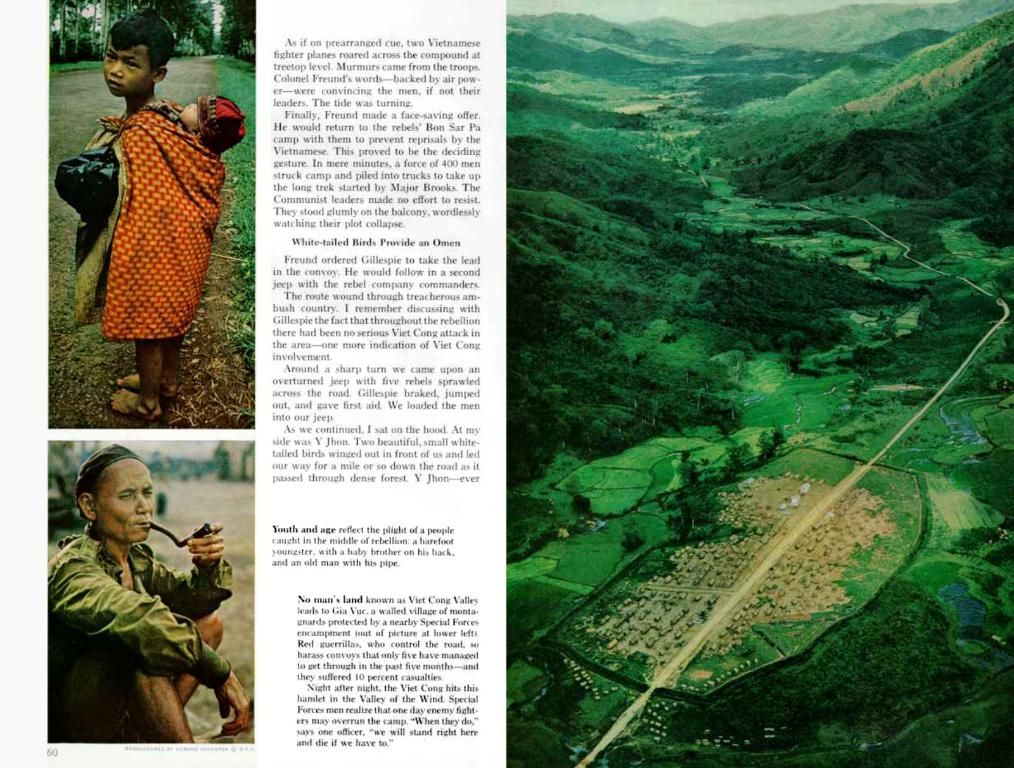

- The scientists from Humboldt University in Berlin have advanced deepfake technology by creating digital human twins that simulate realistic heartbeats and changes in facial color. They also developed a detector that estimates pulse rate from video, whose readings differed from electrocardiography measurements by only two to three beats per minute.

- The application of deepfake technology in healthcare could provide a novel approach for remote patient monitoring using webcams, but it also raises concerns about the potential for creating fraudulent medical imagery or AI-generated misinformation.

- In the realm of finance and social media, enhancing deepfake detection is vital to prevent identity verification breaches and impersonation, given the rising realism of deepfakes.

- Efforts to counter the risks associated with deepfakes include the development of robust detection tools and legislative measures such as the Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks Act (TAKE IT DOWN Act), the intimate deepfakes bill, and the EU’s AI Act, the last of which emphasizes accountability for high-risk AI applications.