Gesture-Sensing Wristband Controls Desktop and Laptop Computers

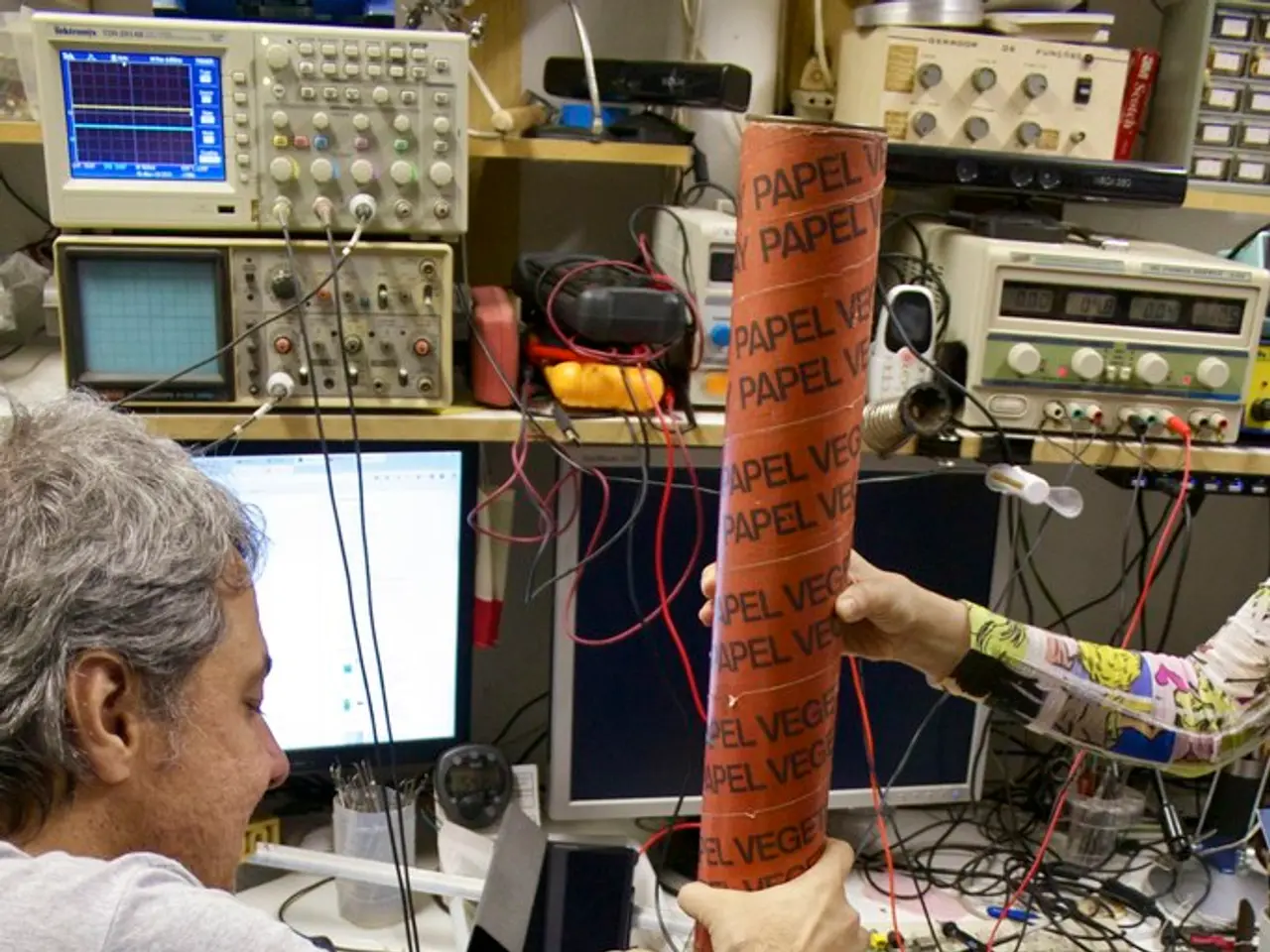

Researchers at Meta's Reality Labs have unveiled a new neuromotor interface for computer interaction. Described in a paper published in Nature, the technology is a wrist-worn device that uses surface electromyography (sEMG) sensors to detect muscle activity at the wrist and translates those signals into computer inputs using deep learning models [1][2][3].
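To make the signal path concrete, here is a minimal sketch of turning a raw muscle-activity trace into discrete input events. It is not the method from the Nature paper, which relies on deep learning models: a simple amplitude threshold stands in for the decoder, the signal is synthetic, and every name and parameter (`rms_envelope`, `detect_events`, the window size, the threshold) is a hypothetical choice made for illustration.

```python
import math
import random


def rms_envelope(samples, window):
    """Root-mean-square amplitude over consecutive, non-overlapping windows."""
    envelope = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / window))
    return envelope


def detect_events(envelope, threshold):
    """Return the window indices where the envelope first rises above threshold."""
    events = []
    active = False
    for i, value in enumerate(envelope):
        if value > threshold and not active:
            events.append(i)  # rising edge: treat as one gesture onset
        active = value > threshold
    return events


def synthetic_channel(n_samples, bursts, seed=0):
    """Fake single-channel trace: low-level noise plus high-amplitude bursts.

    This is an assumption standing in for real sensor data.
    """
    rng = random.Random(seed)
    signal = []
    for i in range(n_samples):
        in_burst = any(start <= i < end for start, end in bursts)
        amplitude = 1.0 if in_burst else 0.05
        signal.append(rng.gauss(0.0, amplitude))
    return signal


signal = synthetic_channel(2000, bursts=[(400, 600), (1200, 1500)])
envelope = rms_envelope(signal, window=100)
events = detect_events(envelope, threshold=0.5)
print(events)  # window indices where a burst of activity begins
```

With the two synthetic bursts above, the onsets fall in windows 4 and 12. A real decoder would replace the threshold step with a trained model that maps multi-channel sEMG to gestures or characters.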

The device lets users control computers and other digital devices with hand gestures such as tapping, swiping, and pinching, and even by handwriting, at speeds of up to 20.9 words per minute [1]. The technology generalizes across users out of the box and can be personalized further for improved accuracy [1][2].
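For a sense of scale, a words-per-minute figure can be worked out from a character count and a duration. The sketch below assumes the common text-entry convention of five characters per word. The article does not say how the 20.9 figure was computed, so the function name, the convention, and the sample numbers are all illustrative assumptions.

```python
def words_per_minute(char_count, seconds, chars_per_word=5):
    """Text-entry rate, assuming a fixed number of characters per 'word'."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    words = char_count / chars_per_word
    minutes = seconds / 60.0
    return words / minutes


# Hypothetical example: 209 characters written in two minutes.
rate = words_per_minute(209, 120)
print(round(rate, 1))
```

Under that assumption, 209 characters in two minutes works out to 20.9 words per minute.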

The potential applications are broad. The wristband could replace traditional input devices such as the keyboard and mouse with a more natural, gesture-based interface, and it keeps working where camera-based sensors fail because of line-of-sight problems [2]. For people with reduced mobility or complete hand paralysis, the wristband can detect minimal or even imperceptible muscle activity, allowing them to interact with computers and digital devices [3][4].

Meta also envisions the wristband working alongside headsets or smart glasses, like Meta's Orion, to allow users to perform complex actions in immersive digital environments, supporting future human-computer interaction paradigms [2]. The technology could extend to consumer applications like gaming control and managing smart home devices through natural hand gestures [4].

The technology's key advantages are that it is non-invasive, easy to use (it is worn like a bracelet), and more private and less disruptive than voice commands. Its high-bandwidth decoding performance, tested on thousands of participants, makes it a notable breakthrough in generalized, muscle-based neural interfaces [1][2][4].

The researchers have made their machine learning models and training frameworks openly available under Creative Commons licenses to encourage further research and development. Meta has not yet disclosed commercial release plans, but projections suggest availability within the next few years [1][4].

Interactions designed to require minimal muscular activity could benefit people with reduced mobility, muscle weakness, or missing effectors. For tasks that require constant availability, some reduction in decoder performance may be acceptable. The authors also note that sEMG decoders cannot replace always-available sEMG wristbands because of cumbersome equipment requirements [1].

This neuromotor interface represents a significant advance in non-invasive, high-bandwidth human-computer interaction technology. Its open publication in Nature and focus on real-world usability mark it as a key step toward more intuitive, muscle-signal-driven interfaces [1][2][3][4]. The article was originally published by Cosmos under the title "Bracelet technology controls computers with a wave of the hand".

References:

[1] King, R., et al. (2022). Non-invasive neuromotor interface for computer interaction. Nature.

[2] Meta Reality Labs. (2022). Bracelet technology controls computers with a wave of the hand. Cosmos.

[3] Carnegie Mellon University. (2022). Research collaboration to explore benefits for people with spinal cord injuries. Press release.

[4] Meta Reality Labs. (2022). The future of human-computer interaction: A non-invasive neuromotor interface. Blog post.

- The neuromotor interface described in the Nature publication is a wristband that uses sEMG sensors and deep learning models to translate hand gestures into computer inputs, which could change how people interact with computers.

- In addition to its use in traditional computer interaction, the wristband's non-invasive nature and high-bandwidth decoding performance make it a promising candidate for applications in gaming control, smart home device management, and immersive digital environments, as suggested by Meta's Reality Labs.